
[[File:Infocepo-picture.png|thumb|right|Discover cloud and AI on infocepo.com]]

= infocepo.com – Cloud, AI & Labs =

Welcome to the '''infocepo.com''' portal.

This wiki is intended for system administrators, cloud engineers, developers, students, and enthusiasts who want to:

* Understand modern architectures (Kubernetes, OpenStack, bare-metal, HPC…)
* Deploy private AI assistants and productivity tools
* Build hands-on labs to learn by doing
* Prepare large-scale audits, migrations, and automations

The goal: turn theory into '''reusable scripts, diagrams, and architectures'''.

__TOC__

----

= Getting started quickly =

== Recommended paths ==

; 1. Build a private AI assistant
* Deploy a typical stack: '''Open WebUI + Ollama + GPU''' (H100 or consumer-grade GPU)
* Add a chat model and a summarization model
* Integrate internal data (RAG, embeddings)

; 2. Launch a Cloud lab
* Create a small cluster (Kubernetes, OpenStack, or bare-metal)
* Set up a deployment pipeline (Helm, Ansible, Terraform…)
* Add an AI service (transcription, summarization, chatbot…)

; 3. Prepare an audit / migration
* Inventory servers with '''ServerDiff.sh'''
* Design the target architecture (cloud diagrams)
* Automate the migration with reproducible scripts

== Content overview ==

* '''AI guides & tools''': assistants, models, evaluations, GPUs
* '''Cloud & infrastructure''': HA, HPC, web-scale, DevSecOps
* '''Labs & scripts''': audit, migration, automation
* '''Comparison tables''': Kubernetes vs OpenStack vs AWS vs bare-metal, etc.

----

= Future =

[[File:Automation-full-vs-humans.png|thumb|right|The world after automation]]

= AI Assistants & Cloud Tools =

== AI Assistants ==

; '''ChatGPT'''
* https://chatgpt.com ChatGPT – Public conversational assistant, suited for exploration, writing, and rapid experimentation.

; '''Self-hosted AI assistants'''
* https://github.com/open-webui/open-webui Open WebUI + https://www.scaleway.com/en/h100-pcie-try-it-now/ H100 GPU + https://ollama.com Ollama
: Typical stack for private assistants, self-hosted LLMs, and OpenAI-compatible APIs.
* https://github.com/ynotopec/summarize Private summary – Local, fast, offline summarizer for your own data.

== Development, models & tracking ==

; '''Discovering and tracking models'''
* https://ollama.com/library LLM Trending – Model library (chat, code, RAG…) for local deployment.
* https://huggingface.co/models Models Trending – Model marketplace, filterable by task, size, and license.
* https://huggingface.co/models?pipeline_tag=image-text-to-text&sort=trending Img2txt Trending – Vision-language models (image → text).
* https://huggingface.co/spaces/TIGER-Lab/GenAI-Arena Txt2img Evaluation – Image generation model comparisons.

; '''Evaluation & benchmarks'''
* https://lmarena.ai/leaderboard ChatBot Evaluation – Chatbot rankings (open-source and proprietary models).
* https://huggingface.co/spaces/mteb/leaderboard Embedding Leaderboard – Benchmark of embedding models for RAG and semantic search.
* https://ann-benchmarks.com Vector DB Ranking – Benchmarks of approximate nearest-neighbor search libraries (recall vs. query throughput).
* https://top500.org/lists/green500/ HPC Efficiency – Ranking of the most energy-efficient supercomputers.

; '''Development & fine-tuning tools'''
* https://github.com/search?q=stars%3A%3E15000+forks%3A%3E1500+created%3A%3E2022-06-01&type=repositories&s=updated&o=desc Project Trending – Major recent open-source projects, sorted by popularity and activity.
* https://github.com/hiyouga/LLaMA-Factory LLM Fine Tuning – Advanced framework for LLM fine-tuning (instruction tuning, LoRA, etc.).
* https://www.perplexity.ai Perplexity AI – Research and synthesis assistant, positioned as a “research copilot”.

== AI Hardware & GPUs ==

; '''GPUs & accelerators'''
* https://www.nvidia.com/en-us/data-center/h100/ NVIDIA H100 – Datacenter GPU for Kubernetes clusters and intensive AI workloads.
* NVIDIA RTX 5080 – Consumer GPU for lower-cost private LLM deployments.
* https://www.mouser.fr/ProductDetail/BittWare/RS-GQ-GC1-0109?qs=ST9lo4GX8V2eGrFMeVQmFw%3D%3D Groq LLM accelerator – Hardware accelerator dedicated to LLM inference.

----

= Open models & internal endpoints =

''(Last update: 2026-02-13)''

The models below correspond to '''logical endpoints''' (for example via a proxy or gateway), selected for specific use cases.

{| class="wikitable"
! Endpoint !! Description / Primary use case
|-
| '''ai-chat''' || Based on '''gpt-oss-20b''' – General-purpose chat, good cost / quality balance.
|-
| '''ai-translate''' || gpt-oss-20b, temperature = 0 – Deterministic, reproducible translation (FR, EN, other languages).
|-
| '''ai-summary''' || qwen3 – Model optimized for summarizing long texts (reports, documents, transcriptions).
|-
| '''ai-code''' || gpt-oss-20b – Code reasoning, explanation, and refactoring.
|-
| '''ai-code-completion''' || gpt-oss-20b – Fast code completion, designed for IDE auto-completion.
|-
| '''ai-parse''' || qwen3 – Structured extraction, log / JSON / table parsing.
|-
| '''ai-RAG-FR''' || qwen3 – RAG usage in French (business knowledge, internal FAQs).
|-
| '''gpt-oss-20b''' || Agentic tasks.
|}

Usage idea: each endpoint is associated with one or more labs (chat, summary, parsing, RAG, etc.) in the Cloud Lab section.
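
One way to read the endpoint table is as a routing map from a logical endpoint name to a backing model and its sampling settings. The sketch below assumes an OpenAI-compatible chat request body (fields <code>model</code>, <code>messages</code>, <code>temperature</code>). The <code>ENDPOINTS</code> dictionary, the <code>build_payload</code> helper, and the default temperature of 0.7 are illustrative assumptions, not the actual gateway configuration; only the temperature of '''ai-translate''' comes from the table.

```python
from typing import Any

# Assumption: default sampling temperature for endpoints where the table
# does not state one.
DEFAULT_TEMPERATURE = 0.7

# Hypothetical routing map derived from the endpoint table.
ENDPOINTS: dict[str, dict[str, Any]] = {
    "ai-chat": {"model": "gpt-oss-20b"},
    "ai-translate": {"model": "gpt-oss-20b", "temperature": 0.0},
    "ai-summary": {"model": "qwen3"},
    "ai-code": {"model": "gpt-oss-20b"},
    "ai-code-completion": {"model": "gpt-oss-20b"},
    "ai-parse": {"model": "qwen3"},
    "ai-RAG-FR": {"model": "qwen3"},
    "gpt-oss-20b": {"model": "gpt-oss-20b"},
}


def build_payload(endpoint: str, user_text: str) -> dict[str, Any]:
    """Resolve a logical endpoint into an OpenAI-style chat request body."""
    try:
        route = ENDPOINTS[endpoint]
    except KeyError:
        raise ValueError(f"unknown endpoint: {endpoint!r}") from None
    return {
        "model": route["model"],
        "temperature": route.get("temperature", DEFAULT_TEMPERATURE),
        "messages": [{"role": "user", "content": user_text}],
    }


if __name__ == "__main__":
    print(build_payload("ai-translate", "Bonjour tout le monde"))
```

Keeping the map in one place means a lab can switch the model behind an endpoint without touching client code.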


----

= News & Trends =

* https://www.youtube.com/@lev-selector/videos Top AI News – Curated AI news videos.
* https://betterprogramming.pub/color-your-captions-streamlining-live-transcriptions-with-diart-and-openais-whisper-6203350234ef Real-time transcription with Diart + Whisper – Example of real-time transcription with speaker detection.
* https://github.com/openai-translator/openai-translator OpenAI Translator – Modern extension / client for LLM-assisted translation.
* https://opensearch.org/docs/latest/search-plugins/conversational-search OpenSearch with LLM – Conversational search based on LLMs and OpenSearch.

----

= Training & Learning =

* https://www.youtube.com/watch?v=4Bdc55j80l8 Transformers Explained – Introduction to Transformers, the core architecture of LLMs.
* Hands-on labs, scripts, and real-world feedback in the [[LAB project|CLOUD LAB]] project below.

----

= Cloud Lab & Audit Projects = | |||

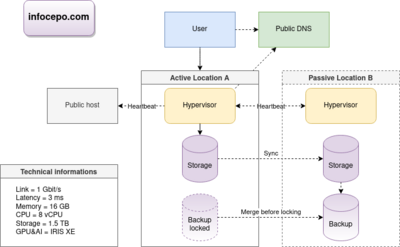

[[File:Infocepo.drawio.png|400px|Cloud Lab reference diagram]] | |||

The '''Cloud Lab''' provides reproducible scenarios: infrastructure audits, cloud migration, automation, high availability. | |||

== Audit project – Cloud Audit == | |||

; '''[[ServerDiff.sh]]''' | |||

Bash audit script to: | |||

* | * detect configuration drift, | ||

* compare multiple environments, | |||

* prepare a migration or remediation plan. | |||

* | |||

* | |||
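
The drift-detection idea can be illustrated independently of ServerDiff.sh, whose internals are not shown on this page. The sketch below is a hypothetical Python helper, <code>diff_configs</code>, that compares two flat key/value snapshots (for example package versions or kernel settings collected from two servers) and reports what is missing, extra, or changed. The sample values are made up.

```python
def diff_configs(reference: dict[str, str], candidate: dict[str, str]) -> dict[str, dict]:
    """Compare two flat configuration snapshots and report drift."""
    ref_keys, cand_keys = set(reference), set(candidate)
    return {
        # Keys present only in the reference snapshot.
        "missing": {k: reference[k] for k in sorted(ref_keys - cand_keys)},
        # Keys present only in the candidate snapshot.
        "extra": {k: candidate[k] for k in sorted(cand_keys - ref_keys)},
        # Keys in both snapshots whose values differ: (reference, candidate).
        "changed": {
            k: (reference[k], candidate[k])
            for k in sorted(ref_keys & cand_keys)
            if reference[k] != candidate[k]
        },
    }


if __name__ == "__main__":
    # Made-up sample snapshots, for illustration only.
    prod = {"openssl": "3.0.13", "kernel": "5.14.0", "vm.swappiness": "10"}
    staging = {"openssl": "3.0.15", "kernel": "5.14.0", "ntp": "enabled"}
    for kind, entries in diff_configs(prod, staging).items():
        print(kind, entries)
```

The three buckets map naturally onto a remediation plan: install what is missing, review what is extra, and reconcile what changed.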

== | == Example of Cloud migration == | ||

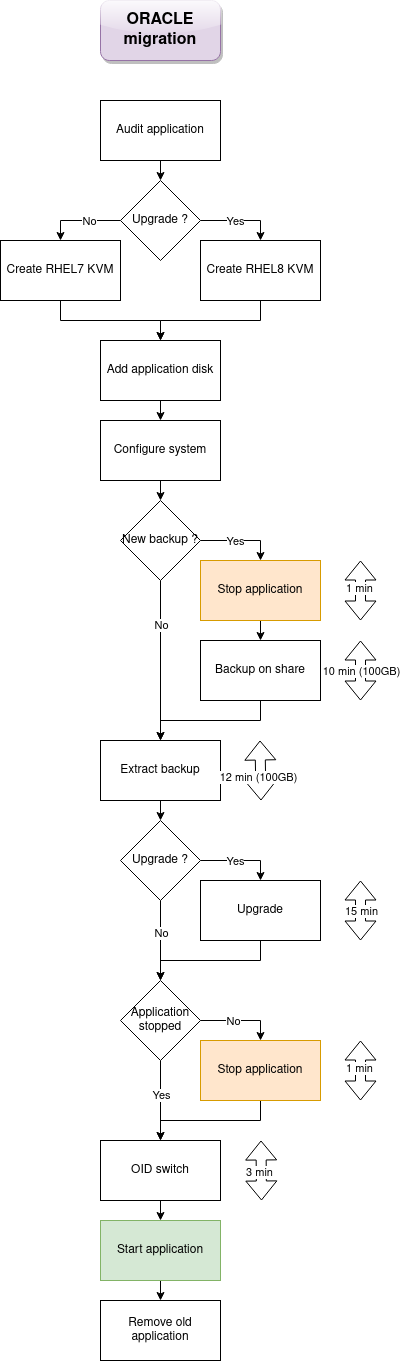

[[File:Diagram-migration-ORACLE-KVM-v2.drawio.png|400px|Cloud migration diagram]] | |||

Example: migration of virtual environments to a modernized cloud, including audit, architecture design, and automation. | |||

{| class="wikitable" | {| class="wikitable" | ||

! Task !! Description !! Duration (days) | |||

|- | |- | ||

| Infrastructure audit || 82 services, automated audit via '''ServerDiff.sh''' || 1.5 | |||

|- | |- | ||

| | | Cloud architecture diagram || Visual design and documentation || 1.5 | ||

| | |||

| | |||

| | |||

| | |||

|- | |- | ||

| | | Compliance checks || 2 clouds, 6 hypervisors, 6 TB of RAM || 1.5 | ||

| | |||

| | |||

| | |||

| | |||

|- | |- | ||

| | | Cloud platform installation || Deployment of main target environments || 1.0 | ||

| | |||

| | |||

| | |||

| | |||

|- | |- | ||

| | | Stability verification || Early functional tests || 0.5 | ||

| | |||

| | |||

| | |||

| | |||

|- | |- | ||

| | | Automation study || Identification and automation of repetitive tasks || 1.5 | ||

| | |||

| | |||

| | |||

| | |||

|- | |- | ||

| | | Template development || 6 templates, 8 environments, 2 clouds / OS || 1.5 | ||

| | |||

| | |||

| | |||

| | |||

|- | |- | ||

| | | Migration diagram || Illustration of the migration process || 1.0 | ||

| | |||

| | |||

| | |||

| | |||

|- | |- | ||

| | | Migration code writing || 138 lines (see '''MigrationApp.sh''') || 1.5 | ||

| | |- | ||

| | | Process stabilization || Validation that migration is reproducible || 1.5 | ||

| | |- | ||

| | | Cloud benchmarking || Performance comparison vs legacy infrastructure || 1.5 | ||

| | |- | ||

| | | Downtime tuning || Calculation of outage time per migration || 0.5 | ||

| | |- | ||

| VM loading || 82 VMs: OS, code, 2 IPs per VM || 0.1 | |||

|- | |||

! colspan=2 align="right"| '''Total''' !! 15 person-days | |||

|} | |} | ||
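
As a quick arithmetic check of the table, the per-task durations add up to 15.1 days, which rounds to the stated total of 15 person-days. The snippet below reproduces that sum; it uses <code>Decimal</code> so that 0.1 is added exactly. The <code>DURATIONS</code> name and <code>total_days</code> helper are illustrative only.

```python
from decimal import Decimal

# Durations in days, copied from the migration table in row order.
DURATIONS = {
    "Infrastructure audit": "1.5",
    "Cloud architecture diagram": "1.5",
    "Compliance checks": "1.5",
    "Cloud platform installation": "1.0",
    "Stability verification": "0.5",
    "Automation study": "1.5",
    "Template development": "1.5",
    "Migration diagram": "1.0",
    "Migration code writing": "1.5",
    "Process stabilization": "1.5",
    "Cloud benchmarking": "1.5",
    "Downtime tuning": "0.5",
    "VM loading": "0.1",
}


def total_days(durations: dict[str, str]) -> Decimal:
    """Sum the per-task durations exactly, avoiding float rounding."""
    return sum((Decimal(d) for d in durations.values()), Decimal("0"))


if __name__ == "__main__":
    total = total_days(DURATIONS)
    print(f"exact total: {total} days, rounded: {round(total)} person-days")
```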

=== Stability checks (minimal HA) ===

{| class="wikitable"
! Action !! Expected result
|-
| Shutdown of one node || All services must automatically restart on remaining nodes.
|-
| Simultaneous shutdown / restart of all nodes || All services must recover correctly after reboot.
|}
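
The two stability checks can be expressed as assertions over a service-placement map. The sketch below is a toy model, not a Pacemaker test harness: <code>reschedule</code> is a hypothetical stand-in for the cluster manager that moves services off failed nodes in round-robin order, and <code>all_services_running</code> is the acceptance check. Node and service names are invented.

```python
def reschedule(placement: dict[str, str], nodes: list[str], failed: set[str]) -> dict[str, str]:
    """Move every service hosted on a failed node to a surviving node (round-robin)."""
    survivors = [n for n in nodes if n not in failed]
    if not survivors:
        raise RuntimeError("no surviving node: services cannot be restarted")
    new_placement = dict(placement)
    moved = 0
    # Iterate in sorted order so the result is deterministic.
    for service, node in sorted(placement.items()):
        if node in failed:
            new_placement[service] = survivors[moved % len(survivors)]
            moved += 1
    return new_placement


def all_services_running(placement: dict[str, str], alive: set[str]) -> bool:
    """Acceptance check: every service sits on a node that is up."""
    return all(node in alive for node in placement.values())


if __name__ == "__main__":
    nodes = ["node1", "node2", "node3"]
    placement = {"web": "node1", "db": "node2", "vip": "node1"}

    # Check 1: shutdown of one node, services restart on the remaining nodes.
    after = reschedule(placement, nodes, failed={"node1"})
    assert all_services_running(after, alive={"node2", "node3"})

    # Check 2: all nodes restarted, the original placement must be valid again.
    assert all_services_running(placement, alive=set(nodes))
    print("stability checks passed:", after)
```

In a real lab the placement would come from the cluster's status output rather than a hard-coded dictionary.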

---- | |||

= Web Architecture & Best Practices = | |||

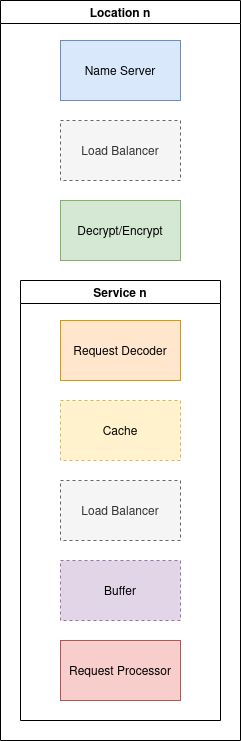

[[File:WebModelDiagram.drawio.png|400px|Reference web architecture]] | |||

Principles for designing scalable and portable web architectures: | |||

* Favor '''simple, modular, and flexible''' infrastructure. | |||

* Follow client location (GDNS or equivalent) to bring content closer. | |||

* Use network load balancers (LVS, IPVS) for scalability. | |||

* Systematically compare costs and beware of '''vendor lock-in'''. | |||

* TLS: | |||

** HAProxy for fast frontends, | |||

** Envoy for compatibility and advanced use cases (mTLS, HTTP/2/3). | |||

* Caching: | |||

** Varnish, Apache Traffic Server for large content volumes. | |||

* Favor open-source stacks and database caches (e.g., Memcached). | |||

* Use message queues, buffers, and quotas to smooth traffic spikes. | |||

* For complete architectures: | |||

** https://wikitech.wikimedia.org/wiki/Wikimedia_infrastructure Wikimedia Cloud Architecture | |||

** https://github.com/systemdesign42/system-design System Design GitHub | |||
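
As an illustration of the "quotas to smooth traffic spikes" principle, here is a minimal token-bucket sketch. It is one common way to implement a quota, not necessarily the mechanism used in the reference architecture; the class name, parameters, and injectable <code>clock</code> are assumptions made for the example.

```python
import time
from typing import Callable


class TokenBucket:
    """Token-bucket quota: bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock            # injectable so tests can control time
        self.tokens = capacity        # start full so an initial burst is accepted
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Return True and consume tokens if the request fits the quota."""
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(rate=5.0, capacity=10.0)
    accepted = sum(bucket.allow() for _ in range(25))
    print(f"accepted {accepted} of 25 requests in one burst")
```

Requests that are refused can be queued or answered with a retry hint, which is where the message queues and buffers from the list come in.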

----

= Comparison of major Cloud platforms =

{| class="wikitable"
! Feature !! Kubernetes !! OpenStack !! AWS !! Bare-metal !! HPC !! CRM !! oVirt
|-
| '''Deployment tools''' || Helm, YAML, ArgoCD, Juju || Ansible, Terraform, Juju || CloudFormation, Terraform, Juju || Ansible, Shell || xCAT, Clush || Ansible, Shell || Ansible, Python
|-
| '''Bootstrap method''' || API || API, PXE || API || PXE, IPMI || PXE, IPMI || PXE, IPMI || PXE, API
|-
| '''Router control''' || Kube-router || Router/Subnet API || Route Table / Subnet API || Linux, OVS || xCAT || Linux || API
|-
| '''Firewall control''' || Istio, NetworkPolicy || Security Groups API || Security Group API || Linux firewall || Linux firewall || Linux firewall || API
|-
| '''Network virtualization''' || VLAN, VXLAN, others || VPC || VPC || OVS, Linux || xCAT || Linux || API
|-
| '''DNS''' || CoreDNS || DNS-Nameserver || Route 53 || GDNS || xCAT || Linux || API
|-
| '''Load Balancer''' || Kube-proxy, LVS || LVS || Network Load Balancer || LVS || SLURM || Ldirectord || N/A
|-
| '''Storage options''' || Local, Cloud, PVC || Swift, Cinder, Nova || S3, EFS, EBS, FSx || Swift, XFS, EXT4, RAID10 || GPFS || SAN || NFS, SAN
|}

This table serves as a starting point for choosing the right stack based on:

* Desired level of control (API vs bare-metal),
* Context (on-prem, public cloud, HPC, CRM…),
* Existing automation tooling.

----

= Useful Cloud & IT links =

* https://cloud.google.com/free/docs/aws-azure-gcp-service-comparison Cloud Providers Compared – AWS / Azure / GCP service mapping.
* https://global-internet-map-2021.telegeography.com/ Global Internet Topology Map – Global Internet mapping.
* https://landscape.cncf.io/?fullscreen=yes CNCF Official Landscape – Overview of cloud-native projects (CNCF).
* https://wikitech.wikimedia.org/wiki/Wikimedia_infrastructure Wikimedia Cloud Wiki – Wikimedia infrastructure, real large-scale example.
* https://openapm.io OpenAPM – SRE Tools – APM / observability tooling.
* https://access.redhat.com/downloads/content/package-browser Red Hat Package Browser – Package and version search at Red Hat.
* https://www.silkhom.com/barometre-2021-des-tjm-dans-informatique-digital Barometer of IT freelance daily rates.
* https://www.glassdoor.fr/salaire/Hays-Salaires-E10166.htm IT Salaries (Glassdoor) – Salary indicators.

----

= Advanced: High Availability, HPC & DevSecOps = | |||

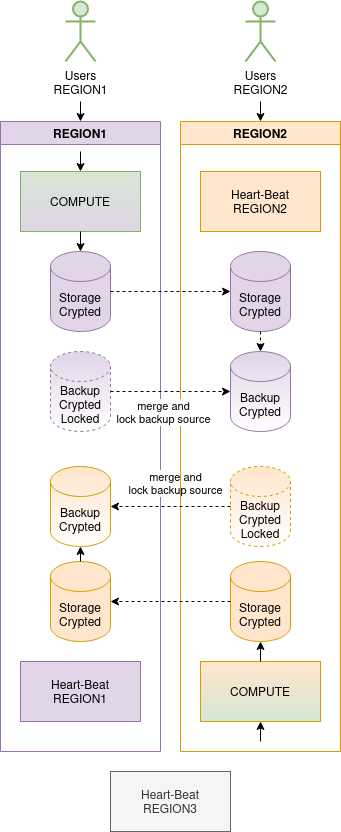

== High Availability with Corosync & Pacemaker == | |||

[[File:HA-REF.drawio.png|400px|HA cluster architecture]] | |||

Basic principles: | |||

* Multi-node or multi-site clusters for redundancy. | |||

* Use of IPMI for fencing, provisioning via PXE/NTP/DNS/TFTP. | |||

* For a 2-node cluster: | |||

– carefully sequence fencing to avoid split-brain, | |||

– 3 or more nodes remain recommended for production. | |||
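
The preference for three or more nodes follows from majority-quorum arithmetic. The sketch below assumes one vote per node and a strict-majority rule; it is a generic calculation, not a Corosync configuration, and the function names are illustrative.

```python
def quorum(nodes: int) -> int:
    """Votes needed for a strict majority in a cluster of `nodes` voters."""
    if nodes < 1:
        raise ValueError("a cluster needs at least one node")
    return nodes // 2 + 1


def tolerated_failures(nodes: int) -> int:
    """Number of nodes that can fail while the rest still hold a majority."""
    return nodes - quorum(nodes)


if __name__ == "__main__":
    for n in (2, 3, 4, 5):
        print(f"{n} nodes: quorum={quorum(n)}, tolerated failures={tolerated_failures(n)}")
```

With two nodes the majority is both of them, so losing either one loses quorum; neither survivor can tell a dead peer from a broken link, which is why fencing order matters there. With three nodes, one failure still leaves a majority of two.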

=== Common resource patterns === | |||

* Multipath storage, LUNs, LVM, NFS. | |||

* User resources and application processes. | |||

* Virtual IPs, DNS records, network listeners. | |||

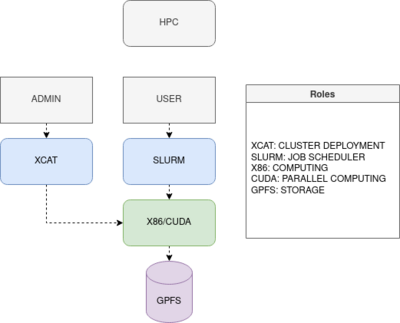

== HPC == | |||

[[File:HPC.drawio.png|400px|Overview of an HPC cluster]] | |||

* Job orchestration (SLURM or equivalent). | |||

* High-performance shared storage (GPFS, Lustre…). | |||

* Possible integration with AI workloads (large-scale training, GPU inference). | |||

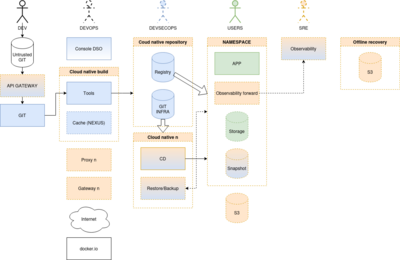

== DevSecOps == | |||

[[File:DSO-POC-V3.drawio.png|400px|DevSecOps reference design]] | |||

* CI/CD pipelines with built-in security checks (linting, SAST, DAST, SBOM). | |||

* Observability (logs, metrics, traces) integrated from design time. | |||

* Automated vulnerability scanning, secret management, policy-as-code. | |||
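
To make the "built-in security checks" idea concrete, here is a minimal secret-scanning sketch of the kind a CI step might run before a merge. The three patterns and the <code>scan</code> helper are illustrative assumptions; dedicated scanners ship far larger and more precise rule sets. The sample key is the placeholder value used in AWS documentation.

```python
import re

# Illustrative rules only; a real pipeline would rely on a dedicated scanner.
PATTERNS = {
    # AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
    "aws-access-key-id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # PEM private key header.
    "private-key": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    # Hard-coded password assignment with a quoted value.
    "hardcoded-password": re.compile(r"(?i)\bpassword\s*[:=]\s*['\"][^'\"]+['\"]"),
}


def scan(text: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) for every line matching a rule."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


if __name__ == "__main__":
    sample = 'user = "bob"\npassword = "hunter2"\nkey = AKIAIOSFODNN7EXAMPLE\n'
    for lineno, rule in scan(sample):
        print(f"line {lineno}: {rule}")
    # A CI job would typically exit non-zero when findings are present.
```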

----

= About & Contributions =

For more examples, scripts, diagrams, and feedback, see:

* https://infocepo.com infocepo.com

Suggestions for corrections, diagram improvements, or new labs are welcome.

This wiki aims to remain a '''living laboratory''' for AI, cloud, and automation.

Latest revision as of 01:24, 13 February 2026
